Abstract

This work addresses robot navigation in crowded environments by integrating goal-conditioned generative models with Sampling-based Model Predictive Control (SMPC). We introduce goal-conditioned autoregressive models that generate crowd behavior and capture intricate interactions among individuals. The model takes sampled candidate robot trajectories as input and predicts how the surrounding individuals will react to each of them, enabling proactive robot navigation in complex scenarios. Extensive experiments show that the algorithm enables real-time navigation, significantly reduces collision rates and path lengths, and outperforms the selected baseline methods. Its practical effectiveness is validated on a real robotic platform, demonstrating its capability in dynamic settings.

Overview

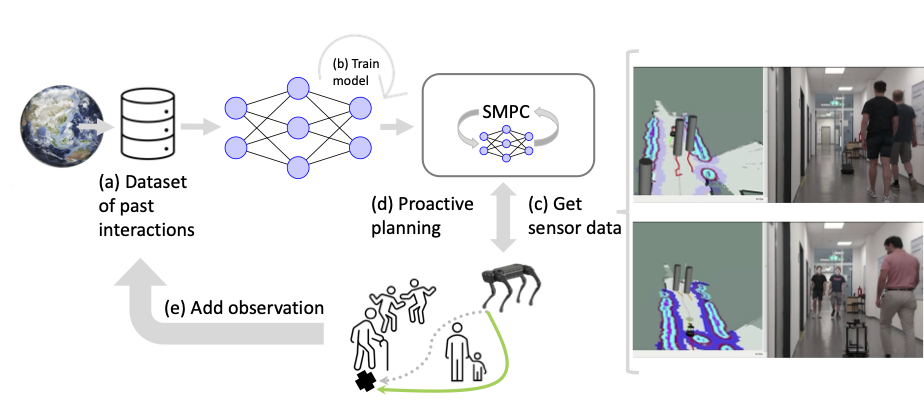

This work explores the potential of generative models trained on videos of human crowds for cooperative robot action planning. We believe such models can help address the "robot freezing" problem, in which the planner finds no path it deems safe and the robot stops, by enabling robots to generate intuitive, human-like behavior that promotes more natural robot-human cooperation. However, applying these models to crowd navigation raises several challenges, including handling continuous actions, dealing with multi-modal data, and conditioning on future outcomes. Moreover, a policy trained solely on human videos will not match a robot's specific kinematic and dynamic constraints, and the datasets lack environment representations that robots can readily interpret.

To address these challenges, we propose combining generative modelling with SMPC. (a) The dataset consists of recordings of crowd dynamics. (b) A generative model is trained on this dataset to forecast the future positions of individuals. (c) The robot, equipped with a 3D camera and a 2D LiDAR sensor, detects and tracks pedestrian positions and builds a cost map for obstacle avoidance. The left side of the images shows four trajectories: the agents' past paths (red), their predicted future paths (orange), the robot's planned trajectory (green), and the robot's global plan (thin red line). Cylinders mark the positions and outlines of humans. (d) The model predictive control framework, enhanced by the generative model, proactively plans robot trajectories that mimic human movement. (e) New observations can be added to the dataset, allowing the approach to scale with more data.

Sampling-based Planning

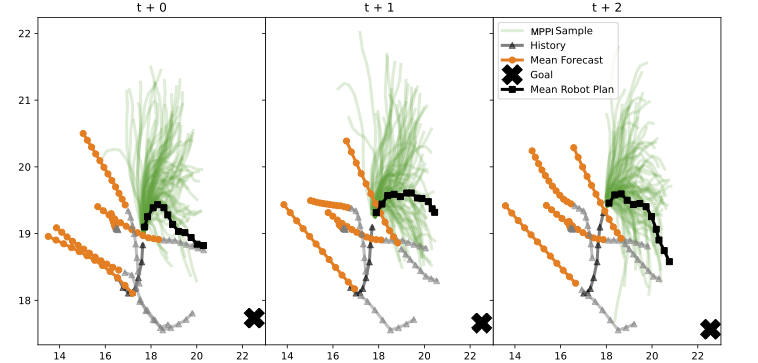

This study examines the Model Predictive Path Integral (MPPI) method within SMPC. MPPI is sampling-based: at each planning step it draws many candidate control sequences, or ‘samples’, for the robot, evaluates the cost of each, and combines them into an updated plan through a cost-weighted average. During planning, all samples are evaluated concurrently by the goal-conditioned generative model. This is made efficient by programming frameworks with strong parallel-computing support, which allow the large number of samples generated by MPPI to be analyzed rapidly and thereby enable more effective and robust decision-making.
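The sketch below illustrates one MPPI update in NumPy, vectorized over all samples as a simple stand-in for the parallel frameworks mentioned above (the source does not name them). Everything here is an assumption for illustration: a 2D single-integrator robot, a summed distance-to-goal cost, a hinge collision penalty with the hypothetical parameters `safe_dist` and `coll_weight`, and `predict_crowd`, a hypothetical constant-velocity placeholder for the goal-conditioned generative model. Unlike the learned model, the placeholder ignores the robot sample it receives.

```python
import numpy as np

def predict_crowd(robot_traj, humans_pos, humans_vel, dt):
    """Hypothetical placeholder for the goal-conditioned generative model.
    The real model conditions on each robot sample; this one ignores it and
    extrapolates every human at constant velocity. Returns (K, T, H, 2)."""
    n_samples, horizon, _ = robot_traj.shape
    steps = np.arange(1, horizon + 1)[:, None, None] * dt      # (T, 1, 1)
    pred = humans_pos[None] + steps * humans_vel[None]         # (T, H, 2)
    return np.broadcast_to(pred, (n_samples,) + pred.shape)

def mppi_step(u_nom, x0, goal, humans_pos, humans_vel, rng, n_samples=512,
              dt=0.1, sigma=0.5, lam=1.0, safe_dist=0.5, coll_weight=100.0):
    """One MPPI update for a 2D single-integrator robot (x_{t+1} = x_t + u_t * dt)."""
    horizon = u_nom.shape[0]
    # Perturb the nominal control sequence: K samples of shape (T, 2)
    noise = rng.normal(0.0, sigma, size=(n_samples, horizon, 2))
    u = u_nom[None] + noise
    # Roll out all samples at once: (K, T, 2)
    traj = x0 + np.cumsum(u * dt, axis=1)
    # Crowd prediction for every sample: (K, T, H, 2)
    crowd = predict_crowd(traj, humans_pos, humans_vel, dt)
    # Goal cost: distance to the goal summed over the horizon
    goal_cost = np.linalg.norm(traj - goal, axis=-1).sum(axis=1)
    # Collision cost: hinge penalty when closer than safe_dist to any predicted human
    dist = np.linalg.norm(traj[:, :, None, :] - crowd, axis=-1)  # (K, T, H)
    coll_cost = coll_weight * np.maximum(safe_dist - dist, 0.0).sum(axis=(1, 2))
    cost = goal_cost + coll_cost
    # Path-integral weights; subtracting the minimum keeps exp() numerically stable
    w = np.exp(-(cost - cost.min()) / lam)
    w /= w.sum()
    # The cost-weighted average of the perturbations updates the nominal plan
    return u_nom + np.einsum("k,ktd->td", w, noise)

rng = np.random.default_rng(0)
u_nom = np.zeros((20, 2))
x0, goal = np.array([0.0, 0.0]), np.array([5.0, 0.0])
humans_pos = np.array([[2.5, 0.3]])
humans_vel = np.array([[0.0, -0.5]])
for _ in range(10):
    u_nom = mppi_step(u_nom, x0, goal, humans_pos, humans_vel, rng)
print(u_nom[0])
```

In a receding-horizon loop, only `u_nom[0]` would be executed before the nominal sequence is shifted by one step and re-optimized from the new state.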

Counterfactual Reasoning

Goal conditioning limits the robot's ability to exploit human reactions: because the predicted individuals pursue their own objectives, the model cannot assume they will always adapt to avoid a collision with the robot. A goal-conditioned generative model can also effectively direct the robot towards its goal, offering insight into both how human-like and how efficient its navigation through the crowd is.

SMPC and goal-conditioned generative models also provide a straightforward way to perform counterfactual reasoning. We can compare a single marginal prediction of the scene, in which the robot is not included, with many predictions that are each conditioned on a sampled robot plan. Comparing the two kinds of prediction quantifies how much a robot plan influences the scene. We call this value the Social Influence Reward (SIR).
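The source does not give the SIR formula, so the following is only a sketch under assumptions: influence is measured as the mean per-step displacement between each robot-conditioned prediction and one marginal prediction. `rollout_crowd` is a hypothetical, hand-written goal-conditioned autoregressive rule (each human walks toward its own goal and is pushed away from the robot's planned position), standing in for the learned generative model; `social_influence`, `speed`, `radius`, and `gain` are likewise illustrative names.

```python
import numpy as np

def rollout_crowd(h_pos, h_goal, horizon, robot_plan=None, dt=0.1,
                  speed=1.2, radius=1.0, gain=1.5):
    """Hypothetical goal-conditioned autoregressive predictor (toy rule, not
    the learned model). h_pos, h_goal: (H, 2); robot_plan: (T, 2) or None.
    Returns predicted human positions of shape (horizon, H, 2)."""
    pos = h_pos.astype(float).copy()
    preds = []
    for t in range(horizon):
        to_goal = h_goal - pos
        dist = np.linalg.norm(to_goal, axis=-1, keepdims=True)
        # Each human heads toward its own goal, slowing down so as not to overshoot it
        step_speed = np.minimum(speed, dist / dt)
        vel = step_speed * to_goal / np.maximum(dist, 1e-6)
        if robot_plan is not None:
            # Conditioning on the robot plan: linear repulsion within `radius` metres
            away = pos - robot_plan[t]
            d_r = np.linalg.norm(away, axis=-1, keepdims=True)
            vel = vel + gain * np.maximum(radius - d_r, 0.0) * away / np.maximum(d_r, 1e-6)
        pos = pos + vel * dt  # autoregressive: each prediction feeds the next step
        preds.append(pos)
    return np.stack(preds)

def social_influence(h_pos, h_goal, robot_plans):
    """Assumed SIR-style measure: mean displacement between each robot-conditioned
    prediction and the single marginal prediction. robot_plans: (K, T, 2) -> (K,)."""
    horizon = robot_plans.shape[1]
    marginal = rollout_crowd(h_pos, h_goal, horizon)  # one scene, robot excluded
    return np.array([
        np.linalg.norm(rollout_crowd(h_pos, h_goal, horizon, plan) - marginal,
                       axis=-1).mean()
        for plan in robot_plans
    ])

h_pos, h_goal = np.array([[0.0, 0.0]]), np.array([[4.0, 0.0]])
far_plan = np.column_stack([np.linspace(0.0, 4.0, 20), np.full(20, 5.0)])
head_on = np.linspace([4.0, 0.0], [0.0, 0.0], 20)
influence = social_influence(h_pos, h_goal, np.stack([far_plan, head_on]))
print(influence)
```

A plan that stays well away from the pedestrian leaves the prediction unchanged and scores zero influence, while a head-on plan deflects the pedestrian and scores a positive value.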

With SIR, it is possible to tune how confidently the robot moves. If the SIR weight is set appropriately, a balanced plan is found, as presented in the following GIF, which is rendered from our GYM environment based on real-world human movements.

If the SIR weight is set too high, the robot exhibits robot-freezing behavior.

If the SIR weight is set to a negative value, the robot exhibits annoying behavior.
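To illustrate how the size and sign of the SIR weight change which plan is preferred, the sketch below scores three hypothetical candidate plans with an assumed cost: remaining distance to the goal plus `sir_weight` times the plan's influence. The candidate names and numbers are invented for illustration, and the cost convention (a positive weight penalizes disturbing people) is an assumption chosen so that the resulting behaviors match the description above.

```python
# Hypothetical candidates: (name, remaining distance to goal [m], social influence [m]).
# The values are invented for illustration only; they are not measured results.
CANDIDATES = [
    ("wait in place", 5.0, 0.00),
    ("detour around the group", 1.5, 0.05),
    ("cut through the group", 0.5, 0.60),
]

def select_plan(sir_weight: float) -> str:
    """Return the candidate with the lowest assumed cost:
    remaining distance + sir_weight * influence."""
    best = min(CANDIDATES, key=lambda c: c[1] + sir_weight * c[2])
    return best[0]

for w in (100.0, 5.0, -5.0):
    print(f"sir_weight={w:>6}: {select_plan(w)}")
```

With these toy numbers, a large weight selects waiting in place (freezing), a moderate weight selects the detour, and a negative weight selects cutting through the group.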

Real-World Demonstrations

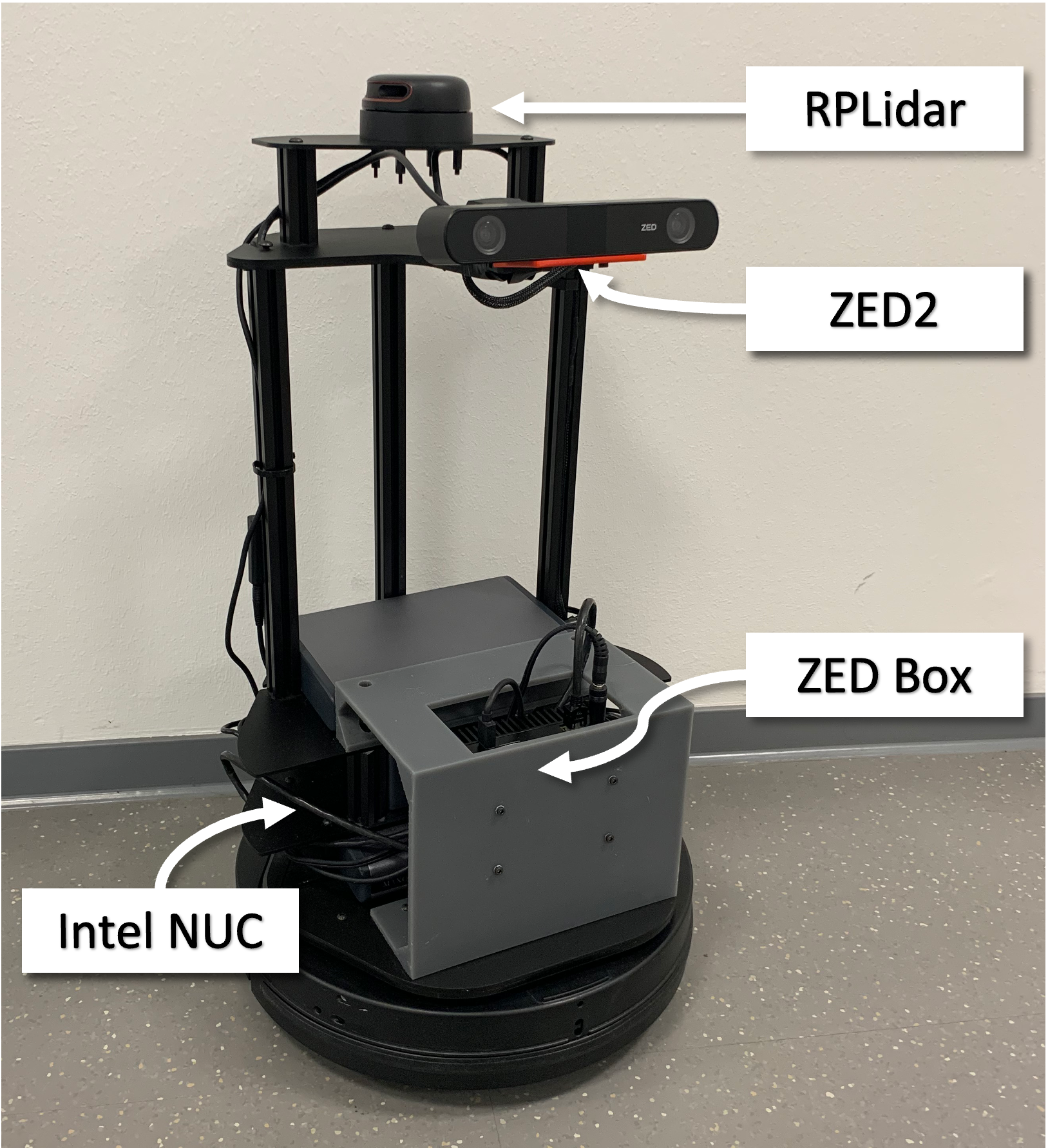

To accomplish real-world locomotion tasks, the proposed algorithm is implemented on the mobile robot platform LoCoBot.